TLDR: Neural networks are cool. I trained a recurrent neural network on a list of around 25000 species names and made it generate its own, then built a Twitter bot that tweets one out every hour. The results are kinda funny.

A plant. Family: Asteraceae. Species: Scrophium wanterfoxamis. Common names: spice-gooffa or roudshead bedneria.

— Undiscovered Species (@undisc_species) April 10, 2017

I’ve always been interested in applying artificial intelligence techniques to ecology. There’s huge potential, and some very low-hanging fruit, in using machine learning to predict species distribution and abundance, as well as a whole list of other things like evolutionary processes and taxonomic classification. Generative neural networks can also produce some surprising and funny results. I see it as a form of uncanny valley, where the results are similar enough to be plausible but strange enough to cause a moment of cognitive disconnect: koans in AI-generated text. These texts give insight into our own linguistic and syntactic abilities. What is normal language, and what are the rules by which we produce and recognise it? Why is it so funny when those rules are broken?

I was recently inspired and amused after coming across an article on Janelle Shane’s blog, in which she lists recipe names generated by a recurrent neural network trained on a corpus of about 30 000 real recipe titles. Janelle has also turned her neural network to several other text sources, including Irish folk songs, knock-knock jokes, Pokémon names, and full recipes themselves, all with hilarious results. I decided that I would take up the torch (you’ll get the pun in a minute) and have a go at producing a neural network capable of generating plausible species names.

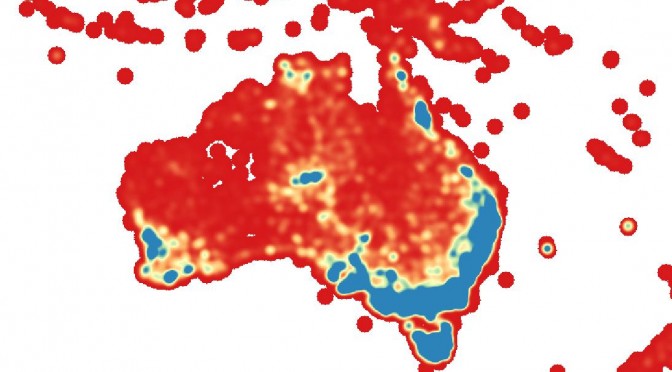

Firstly, I needed to gather a training dataset. To broaden the appeal and increase the comedic value of the output, I decided that producing common names for each species was important, which meant I needed a dataset of species that had common names. This was a little hard to find. In the end I cobbled together lists of around 12600 animals and 14700 plants from various online data sources, including the Atlas of Living Australia and the Global Biodiversity Information Facility. For each species I included the family name, the species binomial, and a list of common names. I kept the plants and the animals separate. The animals dataset was quite heavy in marine creatures, which is visible in the output: the network generates a lot of eels, fish, and crabs.
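As a rough illustration of what one training record might look like on disk (not the exact preprocessing used for the bot), here is a minimal Python sketch. The `SpeciesRecord` class, the `|` and `;` separators, and the one-record-per-line layout are all assumptions made for the example; any consistent plain-text layout would do, since the network only ever sees characters.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SpeciesRecord:
    """One species: family, binomial, and its common names (hypothetical schema)."""
    family: str
    binomial: str
    common_names: List[str]


def to_training_line(record: SpeciesRecord) -> str:
    """Serialize a record as one line of text. The '|' and ';' separators are assumptions."""
    names = ";".join(name.strip() for name in record.common_names)
    return f"{record.family.strip()}|{record.binomial.strip()}|{names}"


def write_corpus(records: List[SpeciesRecord], path: str) -> None:
    """Write one record per line, skipping species that have no common names."""
    with open(path, "w", encoding="utf-8") as handle:
        for record in records:
            if record.common_names:
                handle.write(to_training_line(record) + "\n")


# Illustrative record using a real species.
koala = SpeciesRecord("Phascolarctidae", "Phascolarctos cinereus", ["Koala"])
print(to_training_line(koala))
```

Keeping plants and animals apart would then just mean calling `write_corpus` twice with different lists and file names.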

An animal. Family: Terranidae. Species: Notodus aectris. Common names: Bigeye Sand-hose Fish or Terrheye Hardfish.

— Undiscovered Species (@undisc_species) April 14, 2017

So how’d I actually do it? And what is ‘training’ a neural network? There’s a very good explanation of how recurrent neural networks work here, although that may be a little technical for most. The simple explanation (and this is about the extent to which I understand it properly, so feel free to update or add to my knowledge in the comments) is this: the neural network reads the source text one character at a time, and it makes a guess about what character will come next based on all the previous characters that it has read. Then it checks that next character, and updates its model according to whether it guessed correctly or not. The network doesn’t know anything about English; it doesn’t know anything about the subject matter; it just sees each character as a vector and builds a model of how likely each character is to follow the ones before it. How do you actually train it? That part is pretty simple, thanks to the great tools that have been built in this space over the last few years.
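To make the "guess the next character" idea concrete, here is a deliberately simplified Python sketch. It is not a recurrent neural network and not what torch-rnn does internally: it simply counts which character follows each short context and samples from those counts. A real RNN learns a far richer summary of everything it has read so far, but the predict-the-next-character loop has the same shape. The function names and the `order` parameter are my own choices for the example.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List


def train_char_model(lines: List[str], order: int = 2) -> Dict[str, Counter]:
    """Count which character follows each `order`-character context."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for line in lines:
        # Pad with newlines so the model also learns how lines start and end.
        padded = "\n" * order + line + "\n"
        for i in range(order, len(padded)):
            counts[padded[i - order:i]][padded[i]] += 1
    return counts


def generate(counts: Dict[str, Counter], order: int = 2,
             max_len: int = 80, seed: int = 0) -> str:
    """Sample one line, a character at a time, in proportion to the counts."""
    rng = random.Random(seed)
    context = "\n" * order
    out: List[str] = []
    while len(out) < max_len:
        options = counts.get(context)
        if not options:
            break  # context never seen in training
        chars, weights = zip(*options.items())
        nxt = rng.choices(chars, weights=weights)[0]
        if nxt == "\n":
            break  # the model decided the line is finished
        out.append(nxt)
        context = (context + nxt)[-order:]
    return "".join(out)


# Tiny illustrative corpus of real plant family names.
model = train_char_model(["Asteraceae", "Poaceae", "Fabaceae"], order=2)
print(generate(model, order=2, seed=1))
```

On a corpus this small the toy model can do little more than parrot its training lines. With thousands of lines and a longer context it starts to blend them, which is the same effect, in miniature, that makes the network's invented names plausible but wrong.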

I used torch-rnn, a recurrent neural network package for the Torch (there’s the pun!) scientific computing framework. I followed the excellent guide here, and while I had to dive into GitHub issues a couple of times to solve installation glitches and even had to modify the source code to run on my machine, I got it up and going within an hour or so. Training took a while on my GPU-less MacBook Air, around 12 hours each for the animal and plant datasets. At the end of that process I issued commands that asked the neural network to generate sets of species names based on the animal and plant models it had developed. The output of these went into a text file ready for my Twitter bot to tweet. I generated enough to keep the bot going for around a year at one tweet per hour.
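For a sense of scale, a year of hourly tweets is 24 × 365 = 8760 lines. The sketch below shows one way the generated fields could be turned into tweet text matching the wording of the tweets quoted in this post. The function names are hypothetical, and how the fields are split out of the raw network output is left aside, since that depends on the training file layout.

```python
from typing import List

# One tweet per hour for a year.
TWEETS_PER_YEAR = 24 * 365


def join_names(names: List[str]) -> str:
    """Join common names as the quoted tweets do: 'a or b', or 'a, b, or c'."""
    if len(names) <= 1:
        return "".join(names)
    if len(names) == 2:
        return f"{names[0]} or {names[1]}"
    return ", ".join(names[:-1]) + f", or {names[-1]}"


def format_tweet(kind: str, family: str, binomial: str, names: List[str]) -> str:
    """Build the tweet text; `kind` is 'plant' or 'animal'."""
    article = "An" if kind[0].lower() in "aeiou" else "A"
    return (f"{article} {kind}. Family: {family}. Species: {binomial}. "
            f"Common names: {join_names(names)}.")


print(TWEETS_PER_YEAR)
print(format_tweet("animal", "Terranidae", "Notodus aectris",
                   ["Bigeye Sand-hose Fish", "Terrheye Hardfish"]))
```

Note that nothing here checks whether the network put a sensible value in each field, so a malformed line would be tweeted as is.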

The results don’t quite have the comedic value of Janelle Shane’s recipe titles, but biologists might find them amusing, and it is really interesting how the AI has learned many of the rules of species naming: that plant families should end with -aceae and animal families with -idae, and that species names should have a ‘Latin’ feel to them. In many cases it even used real family names, and sometimes genus names, I guess because there are few enough of them that it could learn that the whole word was commonly used. It learned that species names should be in two parts, and that common names often include hyphens, possessives, and terms like ‘weed’, or might end with ‘fish’ or ‘rose’. Of course, there are many times it gets those things wrong too, sometimes producing a family-like name in place of a species name, which resulted in the bot tweeting a species-like name in place of the common names, or else just combining things in some unrecognisable fashion.

A plant. Family: Poaceae. Species: Archidoxicus alesticum. Common names: tooadokn Afrys banger-cherry, black iris, or broommine hawty-glaup.

— Undiscovered Species (@undisc_species) April 21, 2017

The bot itself is pretty standard; using the Tweepy library really makes it easy to set up a Twitter bot. It runs on my Raspberry Pi, and is triggered by a cron job every hour.
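For anyone curious what such a bot might look like, here is a minimal Python sketch rather than my actual script. It assumes Tweepy's classic `OAuthHandler` / `API.update_status` interface, credentials stored in environment variables with names I have made up, and a queue file holding one ready-made tweet per line. Twitter's API access rules have changed over time, so a current setup may need a different client or endpoint.

```python
import sys
from typing import Optional


def pop_next_tweet(queue_path: str) -> Optional[str]:
    """Remove and return the first non-empty line of the queue file, or None if empty."""
    with open(queue_path, encoding="utf-8") as handle:
        lines = [line.strip() for line in handle if line.strip()]
    if not lines:
        return None
    # Rewrite the file without the line we are about to tweet.
    with open(queue_path, "w", encoding="utf-8") as handle:
        handle.writelines(line + "\n" for line in lines[1:])
    return lines[0]


def post_tweet(text: str) -> None:
    """Post one status via Tweepy. The environment variable names are assumptions."""
    import os
    import tweepy  # third-party: pip install tweepy

    auth = tweepy.OAuthHandler(os.environ["TWITTER_CONSUMER_KEY"],
                               os.environ["TWITTER_CONSUMER_SECRET"])
    auth.set_access_token(os.environ["TWITTER_ACCESS_TOKEN"],
                          os.environ["TWITTER_ACCESS_SECRET"])
    tweepy.API(auth).update_status(status=text)


def main(queue_path: str) -> None:
    """Tweet the next queued line, if there is one."""
    text = pop_next_tweet(queue_path)
    if text is not None:
        post_tweet(text)


# Run only when invoked as: python bot.py <queue file>
if __name__ == "__main__" and len(sys.argv) == 2:
    main(sys.argv[1])
```

A crontab entry of the form `0 * * * * python3 bot.py tweets.txt` (file names hypothetical) would run it at the top of every hour. One trade-off in this sketch: the line is removed from the queue before posting, so a failed post loses that tweet rather than retrying it forever.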

Follow Undiscovered Species on Twitter to keep up with the names.